Hey, I'm Roy Naquin

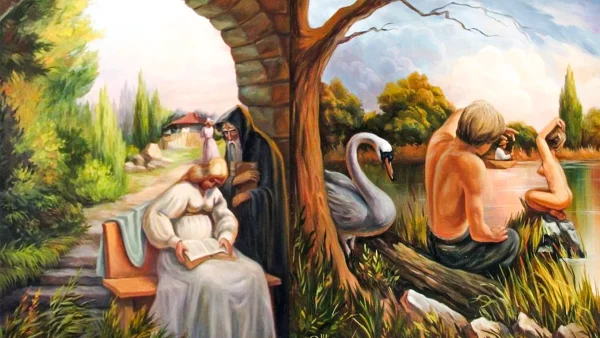

I'm fascinated by how people think and operate in complex, abstract, high-pressure situations. My work explores human cognition, creativity, and how people perceive interactions across different domains.

Let's explore together! Sign up for my newsletter.